Pacman Capture the Flag

By Erik van der Jagt, Nikki Rademaker, and Yanna Smid

Overview

This project was created for the course Modern Game AI Algorithms. The assignment was to design agents for the Pacman Capture the Flag environment. The agents play both offensively and defensively against an opposing team. We developed heuristic agents and agents based on Monte Carlo Tree Search (MCTS).

Gameplay

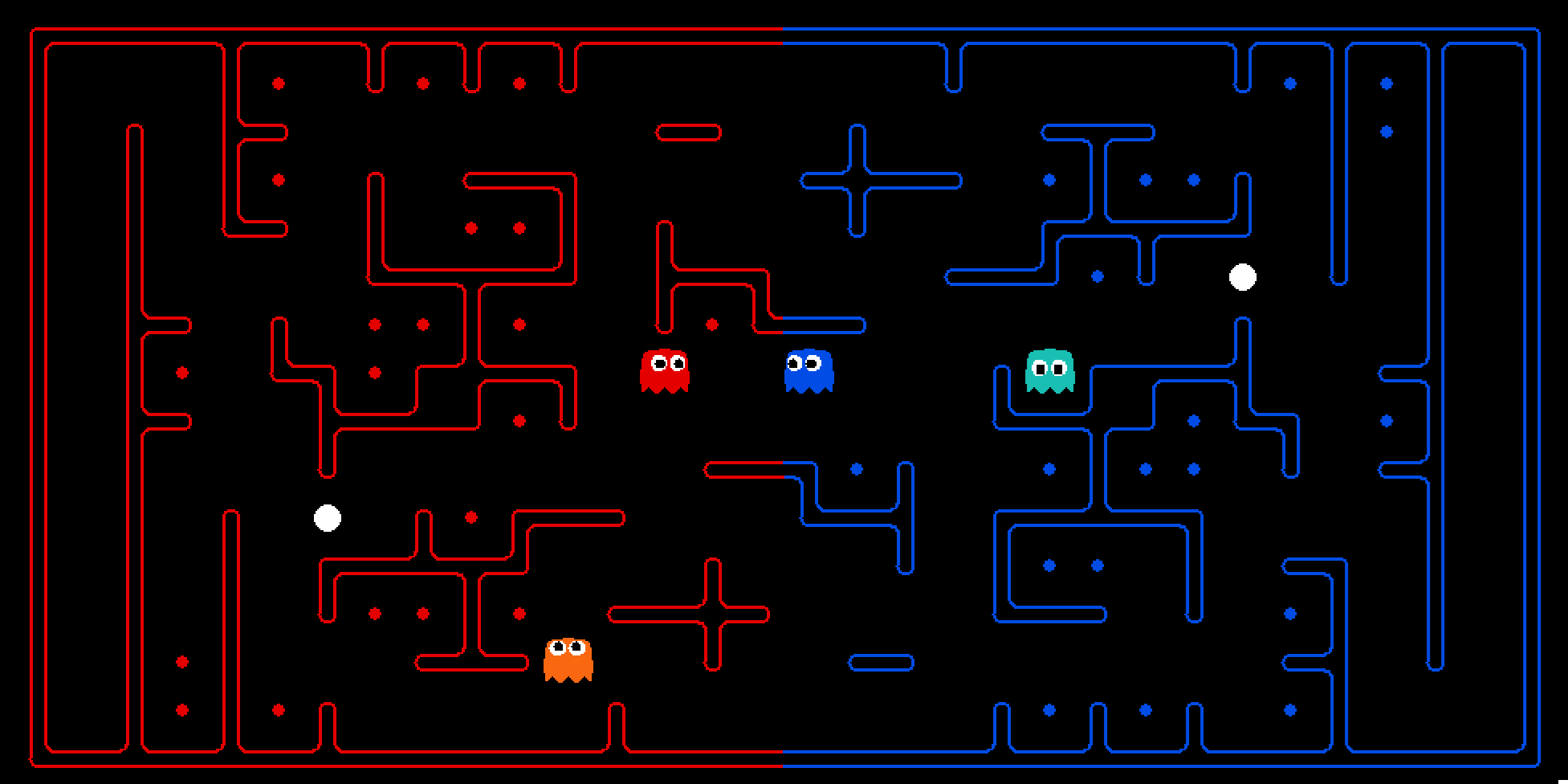

Pacman Capture the Flag is a two-versus-two team game played on a grid map. Each team controls two agents. The map is divided into two halves: red on the left and blue on the right. Agents become Pacman when crossing into the opponent's side and Ghosts when on their own side. Pacmen try to collect food pellets and bring them back home to score points. Ghosts defend their own food and try to catch enemy Pacmen. Each team also has a power capsule. If a Pacman eats a capsule, enemy Ghosts become scared and can be eaten. The game ends when almost all food is eaten, the move limit is reached, or an agent breaks the move-time rules. The team with the most points wins.

In short, in Capture the Flag, each team controls two Pacman agents. The goal is to steal food pellets from the opponent's side and defend your own side. Depending on where agents are on the map, they either attack or defend. If a Pacman collects a capsule, enemy Ghosts become vulnerable for a short time.
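The role switch described above depends only on which half of the map an agent stands on. A minimal sketch, assuming an even map width with the red half on the left; the function name `is_pacman` and its parameters are hypothetical and do not come from the Berkeley framework:

```python
def is_pacman(x: int, map_width: int, is_red: bool) -> bool:
    """Return True if an agent at column x is on the opponent's half.

    Assumption: columns 0 .. map_width // 2 - 1 belong to red,
    the remaining columns belong to blue.
    """
    on_red_half = x < map_width // 2
    # An agent is a Pacman exactly when it stands on the half
    # that does not match its own team colour.
    return on_red_half != is_red
```

On a map that is 32 columns wide, a red agent at column 20 would be a Pacman, while the same agent at column 5 would be a Ghost.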

Technical Development

- All agents were programmed in Python.

- The environment used was a modified Capture the Flag project from UC Berkeley obtained from this GitHub repository.

- Agents used perfect information, meaning they could always see all players and the entire game state.

Implemented Agents

We first developed heuristic agents. They made decisions based on features like distance to food, enemies, and capsules. Offensive agents prioritized collecting food, while defensive agents guarded the home base. A combined agent switched between offense and defense depending on the situation.
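One common way to build such a feature-based agent is to score each candidate action with a weighted sum of its features and pick the highest score. The sketch below illustrates that idea only. The feature names, the weight values, and the helper functions are assumptions made for the example, not the features or weights used in the project.

```python
from typing import Dict

# Hypothetical weights for an offensive agent (illustrative values only).
# Negative weights reward small distances, positive weights reward large ones.
OFFENSE_WEIGHTS: Dict[str, float] = {
    "food_distance": -1.0,     # move towards food
    "enemy_distance": 0.5,     # keep away from enemy Ghosts
    "capsule_distance": -0.2,  # mild pull towards the capsule
}


def evaluate(features: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of features; features without a weight count as zero."""
    return sum(weights.get(name, 0.0) * value for name, value in features.items())


def choose_action(candidates: Dict[str, Dict[str, float]],
                  weights: Dict[str, float]) -> str:
    """Pick the action whose resulting features score highest."""
    return max(candidates, key=lambda action: evaluate(candidates[action], weights))


# Usage: features of the state reached by each legal action.
candidates = {
    "North": {"food_distance": 3, "enemy_distance": 4, "capsule_distance": 6},
    "South": {"food_distance": 5, "enemy_distance": 2, "capsule_distance": 6},
}
best = choose_action(candidates, OFFENSE_WEIGHTS)
```

Under these assumed weights, `North` is preferred because it is closer to food and farther from the enemy. A defensive agent could reuse the same functions with a different weight table.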

We also built several MCTS agents. MCTS agents simulated many future game states to decide on the best move. We explored several MCTS variants: vanilla MCTS with random or heuristic rollouts, MCTS with RAVE (Rapid Action Value Estimation), and MCTS with UCB (Upper Confidence Bound).
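To illustrate how an upper confidence bound guides the search, the sketch below shows the standard UCB1 rule for the selection step of MCTS. It is a generic textbook formulation, not the project's implementation. The `Node` class, the function names, and the exploration constant `c` are assumptions made for the example.

```python
import math
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Node:
    """Minimal search-tree node (hypothetical structure for illustration)."""
    visits: int = 0
    total_reward: float = 0.0
    children: Dict[str, "Node"] = field(default_factory=dict)


def ucb1(parent: Node, child: Node, c: float = math.sqrt(2)) -> float:
    """UCB1 score: average reward plus an exploration bonus."""
    if child.visits == 0:
        return math.inf  # unvisited children are always tried first
    exploit = child.total_reward / child.visits
    explore = c * math.sqrt(math.log(parent.visits) / child.visits)
    return exploit + explore


def select_child(parent: Node) -> str:
    """Return the action leading to the child with the highest UCB1 score."""
    return max(parent.children, key=lambda a: ucb1(parent, parent.children[a]))


# Usage: two children with equal visit counts but different rewards.
root = Node(visits=10, children={
    "North": Node(visits=5, total_reward=4.0),
    "South": Node(visits=5, total_reward=1.0),
})
chosen = select_child(root)
```

With equal visit counts the exploration bonus is identical for both children, so the child with the higher average reward (`North`) is selected. A larger `c` would favour rarely visited children more strongly.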

Experiments and Results

We tested all agents by running a tournament. Agents earned points based on wins, ties, and losses. Heuristic agents generally performed better than MCTS agents. Our best agent was a heuristic agent that started in offense mode. It won the tournament with the highest Elo score.
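For readers unfamiliar with Elo ratings, the sketch below shows the standard Elo update after a single game. The K-factor of 32 and the starting rating of 1500 are assumptions chosen for the example; the settings used in the tournament are not stated here.

```python
from typing import Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(rating_a: float, rating_b: float, score_a: float,
               k: float = 32.0) -> Tuple[float, float]:
    """Return the new ratings of A and B after one game.

    score_a is 1.0 for a win by A, 0.5 for a tie, and 0.0 for a loss.
    k is an assumed K-factor that controls how fast ratings move.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    # The update is zero-sum: what A gains, B loses.
    return rating_a + delta, rating_b - delta


# Usage: two agents starting at an assumed rating of 1500; A wins.
new_a, new_b = update_elo(1500.0, 1500.0, score_a=1.0)
```

Between equally rated agents the expected score is 0.5, so a win moves the winner up by half the K-factor and the loser down by the same amount, while a tie leaves both ratings unchanged.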

Reflection

Heuristic agents were more consistent and easier to control. MCTS agents sometimes behaved unpredictably. Adding heuristics to the MCTS agents improved their performance. Overall, agents that balanced offense and defense carefully performed best.

You can download the full report here.